(Inside Science) -- If your phone rings and you answer it without looking at the caller ID, it's quite possible that before the person on the other end finishes saying "hello," you would already know that it was your mother. You could also tell within a second whether she was happy, sad, angry, or worried.

Humans can naturally recognize and identify other people by their voices. A new study published in The Journal of the Acoustical Society of America explored how exactly people do this. The results may help researchers design more efficient voice recognition software in the future.

The Complexity of Speech

"It's a crazy problem for our auditory system to solve: to figure out how many sounds there are, what they are, and where they are," said Tyler Perrachione, a neuroscientist and linguist from Boston University who was not involved in the study.

Nowadays, Facebook has little trouble identifying faces in photos, even when a face is shown from different angles or under different lighting. Today's voice recognition software is much more limited in comparison, according to Perrachione. That may be related to our limited understanding of how humans are able to recognize voices.

"We humans have different speaker models for different people," said Neeraj Sharma, a psychologist from Carnegie Mellon University in Pittsburgh and the lead author of the new study. "When you listen to a conversation, you switch between different models in your brain so that you can understand each speaker better."

People develop speaker models in their brains as they are exposed to different voices, taking into account subtle differences in features such as cadence and timbre. By switching and adapting between speaker models based on who is talking, people learn to identify and understand different speakers.

"Right now, voice recognition systems don't focus on the speaker aspect; they basically use the same speaker model to analyze everything," said Sharma. "For example, when you talk to Alexa, she uses the same speaker model to analyze my speech and your speech."

So let's say you have a fairly thick Alabama accent. Alexa might think you are saying "cane" when you are trying to say "can't." "If we can understand how humans use speaker-based models, then perhaps we can teach a machine system to do it," said Sharma.

Listen and Say ‘When’

In the new study, Sharma and his colleagues designed an experiment in which a group of human volunteers listened to audio clips of similar voices speaking in turn. The volunteers were asked to identify the exact moment one speaker took over from the previous one.

This allowed the researchers to examine the relationship between certain audio features and the volunteers' reaction times and false alarm rates. From there, they began to decipher which cues humans listen for to detect a change of speaker.
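Here is a minimal Python sketch of how responses in a speaker-change detection task could be scored, to make those two measures concrete. The scoring rule, the two-second tolerance window, and the function names are hypothetical illustrations, not the researchers' actual analysis. Under these assumptions, a button press inside the window after the true change counts as a detection, and its delay is the reaction time. Any press outside the window counts as a false alarm.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class TrialScore:
    hit: bool                       # listener pressed within the window after the true change
    reaction_time: Optional[float]  # seconds from the true change to the first in-window press
    false_alarms: int               # presses that fall outside the window


def score_trial(change_time: float, responses: List[float], window: float = 2.0) -> TrialScore:
    """Score one trial. The window size and the rule itself are hypothetical assumptions."""

    def in_window(t: float) -> bool:
        return change_time <= t <= change_time + window

    detections = sorted(t for t in responses if in_window(t))
    false_alarms = sum(1 for t in responses if not in_window(t))
    if detections:
        # Only the first in-window press counts; later ones in the window are ignored.
        return TrialScore(True, detections[0] - change_time, false_alarms)
    return TrialScore(False, None, false_alarms)


def summarize(scores: List[TrialScore]) -> dict:
    """Aggregate hit rate, mean reaction time over hits, and false alarms per trial."""
    hits = [s for s in scores if s.hit]
    return {
        "hit_rate": len(hits) / len(scores),
        "mean_reaction_time": sum(s.reaction_time for s in hits) / len(hits) if hits else None,
        "false_alarms_per_trial": sum(s.false_alarms for s in scores) / len(scores),
    }


if __name__ == "__main__":
    trials = [
        score_trial(change_time=5.0, responses=[5.6]),       # detected after about 0.6 s
        score_trial(change_time=3.0, responses=[1.2, 3.9]),  # false alarm at 1.2 s, then a detection
        score_trial(change_time=4.0, responses=[]),          # missed change
    ]
    print(summarize(trials))
```

The window is a design choice that affects both measures. Widening it would reclassify some late presses from false alarms to detections and would raise the average reaction time, so a rule like this has to be fixed before the data are analyzed.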

"Currently, we don't have many good experiments that allow us to study talker identification or voice recognition, so this experimental design is actually pretty clever," said Perrachione.

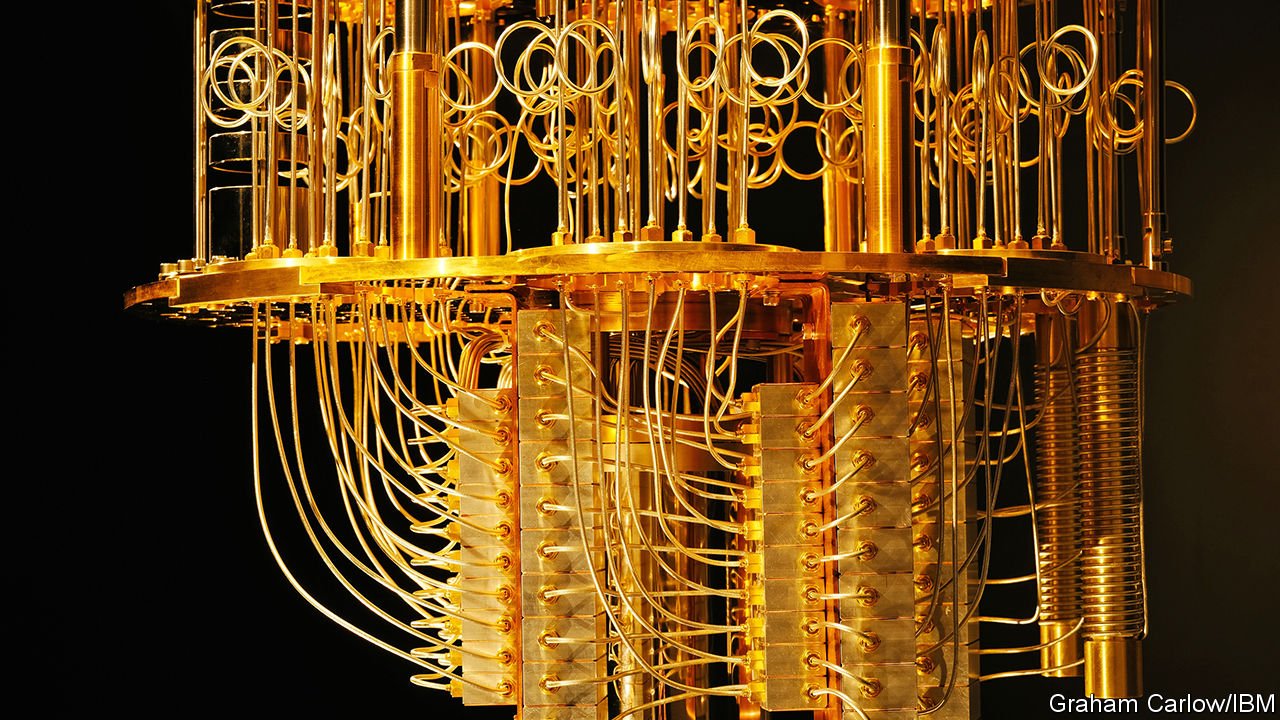

When the researchers ran the same test on several types of state-of-the-art voice recognition software, including a commercially available program developed by IBM, they found that the human volunteers consistently outperformed all of the software tested.

Sharma said they are planning to study the brain activity of people listening to different voices using electroencephalography, or EEG, a noninvasive technique for monitoring brain activity. "That may help us further examine how the brain responds when there's a speaker change," he said.